The Emperor’s New Mind

Mathematical truth is not a horrendously complicated dogma whose validity is beyond our comprehension. -Sir Roger Penrose

The Emperor’s New Mind is Sir Roger Penrose’s argument that you can’t get a true AI by merely piling silicon atop silicon. To explain why, he needs a whole book in which he summarizes most of math and all of physics. Even for a geek like me, someone who’s got the time on his hands and a fascination with these things, it gets a bit thick. While delving into the vagaries of light cones or the formalism of Hilbert space in quantum mechanics it’s easy to wonder, “Wait, what does this have to do with your main argument?” Penrose has to posit new physics in order to support his ideas, and he can’t explain those ideas unless you, the reader, have a sufficient grasp of how the old physics works. It makes for a frustrating read, though.

What defines a sufficient grasp of physics depends strongly on whether or not you are mortality challenged.

The trouble is that even when you’ve done it that way you’re going to miss antecedent arguments. In his chapter discussing the lifetimes of black holes (it involves a lot of just sitting there) he makes an argument about the nature of phase space, which refers back to a theorem he introduced in his review of mathematics. But he offered no proof of the theorem. Was the review not thorough enough? To be thorough enough he’d have to give you the whole education, and you’d walk out of this book with at least a pair of bachelor’s degrees. And, let’s be honest, Penrose makes a better researcher than professor. His ideas are top notch. His explanations of them could use some work.

Knowing the impossibility of covering all the premises, and that I’m writing a Ricochet post and not a tome myself, I’m going to run through the argument backwards here, starting with the conclusion and then describing the antecedents that you need to make it work. I implicitly cede the ground of persuading you that it’s true, but that’s fine, because I’m not persuaded myself. I hope it makes for a cleaner understanding of the argument.

A computer program on a silicon wafer will never be intelligent in the same way that a human brain is.

That’s his conclusion right there. So far so good. That’s the premise for the book; if I didn’t want to hear about that I wouldn’t have picked it up in the first place.

To understand that, we need to know how a human brain’s intelligence differs from that of a computer program. A human brain is conscious, and a computer program is algorithmic.

Okay, we’re going to need to know what algorithmic means, and we’re going to need to know what it means to be conscious. The former is easier to define than the latter. A process may be said to be algorithmic if you can reach the conclusion of the process by means of a well-defined series of steps. If you follow the rules for doing long division correctly you’ll get the correct answer, even if you’re not thinking about it particularly hard. Computers are algorithmic; physically you’ve got a lot of transistors switching on and off.
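To make "a well-defined series of steps" concrete, here is a minimal sketch of schoolbook long division in Python. It's my own illustration, not anything from Penrose's text, and the function name `long_division` is made up for the occasion. The point is only that every step is mechanical: bring down a digit, count how many times the divisor fits, carry the remainder along.

```python
def long_division(dividend: int, divisor: int) -> tuple[int, int]:
    """Schoolbook long division on non-negative integers.

    Hypothetical helper for illustration; returns (quotient, remainder).
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")

    quotient_digits = []
    remainder = 0
    for digit in str(dividend):
        # Step 1: bring down the next digit.
        remainder = remainder * 10 + int(digit)
        # Step 2: count how many times the divisor fits.
        fits = 0
        while remainder >= divisor:
            remainder -= divisor
            fits += 1
        # Step 3: write that count as the next digit of the quotient.
        quotient_digits.append(str(fits))

    return int("".join(quotient_digits)), remainder


quotient, remainder = long_division(1989, 7)
print(quotient, remainder)
```

Nothing in there requires understanding what division means, which is rather the point.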

A heap of transistors turning themselves on or off turns a binary 0011 into the number three on your digital watch. Neat!

Consciousness is a non-algorithmic process.

Okay, that one’s a leap, and Penrose identifies it as such in the text. One of the major problems in this whole debate is what exactly it means to be conscious; the word remains in a “I knows it when I sees it” category. But let me back the argument down one more step before I keep going.

There exist non-algorithmic processes.

Wait, what does that mean, exactly? A process is non-algorithmic if there’s no algorithm we can use to get a correct answer. To count as algorithmic, a process doesn’t need a good algorithm, or even one that’ll get the job done in any sort of practical time frame; it only needs, in principle, a predictable way to get at the answer. Take password cracking as an example; a brute force attack (guess every possible password until you hit the right one) works, even if in practice it’s infeasible to guess a long enough password. On the flip side, what if you only get ten guesses and have to stop? The FBI ran into that problem with the terrorist’s iPhone after he died in the San Bernardino attack. There’s no process you can use that will guarantee a correct guess within the first ten attempts. (The FBI eventually got the phone unlocked, and though they won’t say how, I can almost guarantee it wasn’t by guess and check.)
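The password example can be sketched in a few lines of Python. This is a toy under loud assumptions: lowercase-only passwords, a short maximum length, and a made-up `check` callback standing in for the lock screen. The names `brute_force` and `max_guesses` are mine. With unlimited guesses the search always succeeds eventually; cap it at ten and no ordering of guesses can promise a hit.

```python
from itertools import product
import string


def brute_force(check, alphabet=string.ascii_lowercase, max_length=4, max_guesses=None):
    """Try every candidate in a fixed order; return (password or None, guesses used).

    `check` is a hypothetical stand-in for "does this password unlock the device?"
    """
    guesses = 0
    for length in range(1, max_length + 1):
        for letters in product(alphabet, repeat=length):
            if max_guesses is not None and guesses >= max_guesses:
                return None, guesses  # locked out before finding it
            guesses += 1
            candidate = "".join(letters)
            if check(candidate):
                return candidate, guesses
    return None, guesses


secret = "cab"  # toy secret, assumed for the demo
unlocks = lambda guess: guess == secret

print(brute_force(unlocks))                  # unlimited guesses: guaranteed to find it
print(brute_force(unlocks, max_guesses=10))  # ten guesses: no such guarantee
```

Each extra character multiplies the search space by the alphabet size, which is why "works in principle" and "works before the sun burns out" are different claims.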

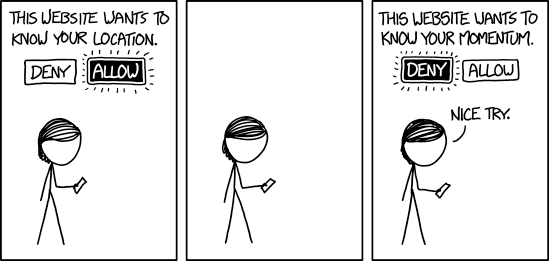

For more discussion of what’s an algorithm and why, please click on the picture. It’s not even a RickRoll!

The book’s primary example of a non-algorithmic process is the halting problem. A Turing machine (you could read “Turing machine” as “computer program” if you’re unfamiliar with the concept) may or may not stop. If you’ve ever watched that little hourglass turn itself upside down just hoping that you’ll get a chance to save your work before everything goes kablooie then you can understand why the halting problem is important.

It can be proven (it is proven in the book; I don’t intend to go all the way down the logic chain here) that there’s no general solution to the halting problem. But sometimes we can look at a program running and tell that it’s not going to stop. Does that mean that we have a better algorithm in our brain, one that detects stalled processes where our computers don’t, or does that mean that our brain is doing something else?
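For the curious, the flavor of that proof can be sketched in Python. This is a simplified version of the standard diagonal argument, not Penrose's presentation: I'm assuming programs that take no input, and `make_contrary` and the candidate deciders are hypothetical names. Hand me any function that claims to predict whether a program halts, and I can build a program that does the opposite of whatever it predicts.

```python
def make_contrary(halts):
    """Build a program that defies the given (hypothetical) halting predictor."""

    def contrary():
        if halts(contrary):
            # The predictor said "this halts", so loop forever instead.
            while True:
                pass
        # The predictor said "this never halts", so halt immediately.
        return "halted"

    return contrary


# A candidate predictor that always answers "it never halts".
pessimist = lambda program: False
contrary = make_contrary(pessimist)
print(pessimist(contrary), contrary())  # predicted no halt, yet it halted

# A candidate that always answers "it halts" is wrong the other way:
# its contrary program would spin forever, so we don't run that one here.
optimist = lambda program: True
stuck = make_contrary(optimist)
```

Whatever the predictor says about its own contrary program, the program does the reverse, so no predictor can be right about everything. Notice that we could tell `stuck` would never finish just by reading it, which is exactly the sort of observation the argument turns on.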

This also relates to Gödel’s incompleteness theorem, also discussed in the text. Gödel proved that, for any consistent set of axioms rich enough to describe arithmetic, there exist true propositions which nevertheless can’t be proven by means of those axioms. But the proof relies on the use of intuition to observe that something which is obviously true is in fact true. Does the fact that we see that imply the brain isn’t just a computer running on neurons rather than transistors? That depends on how a brain works, doesn’t it? We’d better look at the other half of the proposition.

The brain may function on non-computable principles

Penrose is explicit in saying that certain functions of the brain, things like intuition and insight, are what define consciousness, and that they aren’t algorithmic processes. But to say that, he needs to come up with a mechanism in the brain that allows our protoplasm to solve non-algorithmic problems.

Here Penrose is on as shaky ground as anywhere in the argument. You can tell he’s less confident talking about brain structures than he was about quantum mechanics, though I don’t think we can blame a physicist for that. But even if he was dogmatically correct on all the points of brain architecture as it was known to science, this book is thirty-some years old now. I do not believe the science has stood still in that time. Penrose identifies the growth and retraction of certain nerve connections as a potentially non-algorithmic process which could be a basis for consciousness that’s fundamentally different from the Turing machines you’re reading this on.

As an aside here, his description of chess programs is still accurate. Though the year 1989 did not yet have programs that could beat grandmasters, and today I can go onto YouTube and watch a machine-learning program pants a traditional chess program (which in turn can outplay any human), the essentials of the argument hold up.

Penrose has to posit new physical theories in order to allow the brain’s nerve growth to be non-algorithmic. Almost paradoxically he’s on firmer ground when he does so. Penrose is an independent thinker and a career physicist and mathematician. If you asked me to list the people on the planet most likely to revolutionize physics, his name would be at the top of the list. (Perhaps not anymore; the man’s ninety. He has my permission to retire.) Penrose proposes that a new theory of quantum gravity may be coming, which in addition to resolving the conflict between relativity and quantum mechanics (the two great theories of the earlier part of last century) will resolve the hazy border between classical and quantum mechanics.

Let me just pause for a moment on that. According to the standard quantum theory you have a particle, and that particle has a wave function that describes it. The wave function evolves in a perfectly predictable manner until you measure it, at which point it collapses from a range of probabilities into one actuality. Then the wave function starts evolving again. But what does it mean to take a measurement? At what point does a quantum level particle say “Oops! Light’s on! Everyone act normal.”?

Penrose posits a future physics where the difference between the quantum and the classical world depends on gravity. Once a quantum effect gets large enough, affecting enough mass that (quantized) gravity jumps from zero to one unit, the wave function collapses and one probability is selected out of those available. Really, the bulk of the book is background so you can make sense of how that’s all supposed to work.

Now, we’re ready to march back up the logic chain. If there exists a future physics with (as he asserts) non-computable aspects, then perhaps that future physics explains nerve connection growth, and perhaps that allows the brain to manage things like flashes of insight, things that plodding, algorithmic silicon seems unlikely to produce.

Perhaps.

Actually, I find his ideas about where physics might lead to be plausible. Certainly the idea of quantized gravity leading to the collapse of wave functions is elegant enough that it might very well be true. As to the actual structures of the brain, maybe I’m being too harsh. If Penrose came from a physics background, well, so did I, and he at least did his homework on this stuff. Really his best evidence for his view comes from the nature of insight, and surely the assertion that it can’t be produced by any sufficiently complicated algorithm is as plausible as the assertion that it can, because we simply don’t know what insight is or how it happens.

It takes four hundred and fifty pages to get this far. Along the way Penrose manages to hit on just about every topic in physics and mathematics. (I think he missed topology, but I’m not positive.) I find myself reacting to the book much like I did to Moby Dick; it’s a great opportunity to learn something, so long as you’re not too specific about what you’re learning. If you just let the whale take care of itself and you sit back and enjoy the essays on the whaling trade you can have a good time with that book. If all you want is the action, well, prepare to skip a number of chapters. And if you’re in it for the sea shanties I’ve got the hookup right here:

Was this book what I was looking for? No, not really. It is my conviction that the nature of the human brain builds certain blind spots into our perception of reality, and that any true picture of the world and how it works requires us to at least try to peek around the edges of those blind spots. I came in looking for evidence either for or against that position, and to see if I could infer some of the limits of those blind spots. That’s not what I found here. But if I did not read what I wanted to know, I read plenty that I ought to know.

Published in Science & Technology

AI has a lot in common with a camera. It can’t create the scene. It can only record what’s already there.

AI cannot create thoughts. It never will.

/Users/kentlyon/Downloads/Noesis.pdf

Above is a link to a text you can peruse, now that you’ve struggled with Penrose’s expositions on Mind. Good luck. Feedback appreciated. Thanks.

The link defaults to ricochet.com/users/kentlyon/ yada yada. Quoth the server 404.

What about when you have a machine learning situation? You ‘train’ a machine by feeding it a large data set, ask it to draw its own conclusions (much like Clavius’ Hadoop data lake. Okay, mostly I wanted to say ‘Hadoop’; it’s a fun word.), and see what you can get out of it. I’m missing a great deal of the details but there have been some impressive successes in that area. AlphaFold, a Google machine learning algorithm (related to the chess playing one I mentioned in the post), has managed to solve the protein folding problem, which wasn’t something that human intelligence had been able to manage before.

Okay, but a human mind conceived of the program, and implemented it, and only then let it grow on its own. But (again, assuming a thoroughly materialistic worldview) how does that differ from giving birth to a child? Are we to suppose that the child is not intelligent because the parents gave rise to it?

To flip things the other way, it is my personal belief (not based in any fact I can muster) that if we do manage to produce a true silicon intelligence it will be because God has ensouled it.

Or perhaps it is inhabited by other spiritual creatures. Perhaps who are looking to inhabit a body once again.

In the heyday of the 60s, AI researchers were informally referred to as “neats” or “scruffies”. The neats believed that you could work your way to consciousness by a higher and higher level set of formal rules; this lent itself to the conventional von Neumann-birthed mainframes available to them. The scruffies were a mixed, irregular lot–hey, the name alone is a tipoff–and many ended up as connectionists, believers in the power of neural networks to teach themselves. At that time, neural networks were created on specialized hardware, but over time it became accepted practice to emulate x number of perceptrons in standard hardware. The connectionists appeared to be a cult, and there were definite limitations to what they could do, but inside of those limitations, they could do a whole lot, and neural network training is now as normal–and as tasty and valued!–as apple pie.

The intelligence is in the conception of the problem that we give the computer to solve. It’s in the recognition that there is a problem to solve. Creation and design and motivation are the parts of human intelligence that cannot be replicated by artificial intelligence.

A child is not born a blank slate. The child has innate emotion and motivation and creativity that direct his or her mind.