Cargo Cult Science

The first principle [of science] is that you must not fool yourself — and you are the easiest person to fool. –– Richard Feynman, from his 1974 commencement address at Caltech

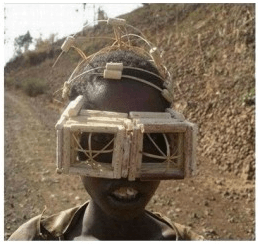

South Sea Island Infrastructure Project

In 1974, Richard Feynman gave the commencement address at Caltech, in which he cautioned the audience to understand and be wary of the difference between real science and what he called “Cargo Cult” science. The lecture was a warning to students that science is a rigorous field that must remain grounded in hard rules of evidence and proof. Feynman went on to explain to the students that science is extremely hard to get right, that tiny details matter, that it is always a struggle to make sure that personal bias and motivated reasoning are excluded from the process.

It’s not the good will, the high intelligence, or the expertise of scientists that makes science work as the best tool for discovering the nature of the universe. Science works for one simple reason: It relies on evidence and proof. It requires hypothesis, prediction, and confirmation of theories through careful experiment and empirical results. It requires excruciating attention to detail, and a willingness to abandon an idea when an experiment shows it to be false. Failure to follow the uncompromising rules of science opens the door to bias, groupthink, politically motivated reasoning, and other failures.

Science is the belief in the ignorance of experts. — Richard Feynman

As an example of how unconscious bias can influence even the hardest of sciences, Feynman recounted the story of the Millikan Oil Drop Experiment. The purpose of the experiment was to determine the value of the charge of an electron. This was a rather difficult thing to measure with the technology of the time, and Millikan got a result that was just slightly too low due to experimental error: he used the wrong value for the viscosity of air in his calculations. This was the result that was published.

Now, a slightly incorrect result is not a scandal; it’s why we insist on replication. Even the best scientists can get it wrong once in a while. This is why the standard protocol is to publish all data and methods so that other scientists can attempt to replicate the results. Millikan duly published his methods along with the slightly incorrect result, and others began doing oil drop experiments themselves.

As others published their own findings, an interesting pattern emerged: The first published results after Millikan’s were also low, just not by quite as much. And the next generation of results were again too low, but slightly higher than the last. This pattern continued for some time until the experiments converged on the true number.

Why did this happen? There was nothing about the experiment that should lead to a consistently low answer. If it was just a hard measurement to make, you would expect experimental results to be randomly distributed around the real value. What Feynman realized was that psychological bias was at work: Millikan was a great scientist, and no one truly expected him to be wrong. So when other scientists found their results were significantly different from his, they would assume that they had made some fundamental error and throw the results out. But when randomness in the measurement resulted in a measurement closer to Millikan’s, they assumed that it was a better result. They were filtering the data until the result reached a value that was at least close enough to Millikan’s that the error was ‘acceptable’. And then when that result was added to the body of knowledge, it made the next generation of researchers a little more willing to settle on a result that was a bit closer to the truth, but still too low.
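The filtering described above can be sketched as a small simulation. This is a toy model under stated assumptions, not a reconstruction of the historical measurements: the numbers, the acceptance rule (`tolerance`), and the names `run_generation` and `simulate` are all hypothetical. Each lab measures the true value with unbiased random noise, but keeps re-measuring until its result lands close to the previously published figure. The sign of the starting offset is arbitrary; flipping it simply mirrors the drift.

```python
import random
import statistics


def run_generation(true_value, anchor, noise_sd, tolerance, n_labs, rng):
    """Return the mean result 'published' by one generation of labs.

    Assumption: every lab repeats an unbiased but noisy measurement and
    discards any result further than `tolerance` from the current anchor.
    """
    accepted = []
    for _ in range(n_labs):
        while True:
            measurement = rng.gauss(true_value, noise_sd)
            if abs(measurement - anchor) <= tolerance:
                accepted.append(measurement)
                break
    return statistics.mean(accepted)


def simulate(true_value=100.0, first_result=97.0, noise_sd=1.0,
             tolerance=1.5, generations=8, n_labs=500, seed=0):
    """Track the accepted value as each generation anchors on the last one."""
    rng = random.Random(seed)
    consensus = [first_result]
    for _ in range(generations):
        consensus.append(
            run_generation(true_value, consensus[-1], noise_sd,
                           tolerance, n_labs, rng)
        )
    return consensus


if __name__ == "__main__":
    for generation, value in enumerate(simulate()):
        print(f"generation {generation}: accepted value = {value:.2f}")
```

In this sketch no individual measurement is biased. The slow creep toward the true value comes entirely from which measurements get kept.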

Note that no one was motivated by money, or politics, or by anything other than a desire to be able to replicate a great man’s work. They all wanted to do the best job they could and find the true result. They were good scientists. But even the subtle selection bias caused by Millikan’s stature was enough to distort the science for some time.

The key thing to note about this episode is that eventually they did find the real value, but not by relying on the consensus of experts or the gravitas and authority of a great scientist. No, the science was pulled back to reality only because of the discipline of constant testing and because the scientific question was falsifiable and experimentally determinable.

Failing to live up to these standards (controlled double-blind tests, predictions followed by tests of those predictions, and other ways of concretely testing the truth of a proposition) means you’re not practising science, no matter how much data you have, how many letters you have after your signature, or how much money is wrapped up in your scientific-looking laboratory. At best, you are practising cargo-cult science, or as Friedrich Hayek called it in his Nobel speech, ‘scientism’ – adopting the trappings of science to bolster an argument while at the same time ignoring or glossing over the rigorous discipline at the heart of true science.

This brings us back to cargo cults. What is a cargo cult, and why is it a good metaphor for certain types of science today? To see why, let’s step back in time to World War II, and in particular the war against Japan.

The Pacific Cargo Cults

During World War II, the Allies set up forward bases in remote areas of the South Pacific. Some of these bases were installed on islands populated by locals who had never seen modern technology and who knew nothing of the strange people coming to their islands. They watched as men landed on their island in strange steel boats and then began to cut down jungle and flatten the ground. To the islanders, it may have looked like an elaborate religious ritual.

In due time, after the ground was flat and lights had been installed along its length, men with strange disks over their ears spoke into a little box in front of their mouths, uttering incantations. Amazingly, after each incantation a metal bird would descend from the sky and land on the magic line of flat ground. These birds brought great wealth to the people – food they had never seen before, tools, and medicines. Clearly the new God had great power.

Years after the war ended and the strange metal birds stopped coming, modern people returned to these islands and were astonished by what they saw: ‘runways’ cut from the jungle by hand, huts with bamboo poles for antennas, locals wearing pieces of carved wood around their ears and speaking into wooden ‘microphones’, imploring the great cargo god of the sky to bring back the metal birds.

Ceremony for the new Tuvaluan Stimulus Program

Understand, these were not stupid people. They were good empiricists. They painstakingly watched and learned how to bring the cargo birds. If they had been skilled in modern mathematics, they might even have built mathematical models exploring the correlations between certain words and actions and the frequency of cargo birds appearing. If they had sent explorers out to other islands, they could have confirmed their beliefs: every island with a big flat strip and people with devices on their heads was being visited by the cargo birds. They might have found that longer strips bring even larger birds, and used that data to predict that if they found an island with a huge strip it would have the biggest birds.

Blinded with Science

There’s a lot of “science” that could have been done to validate everything the cargo cultists believed. There could be a strong consensus among the most learned islanders that their cult was the ‘scientific’ truth. And they could have backed it up with data, and even some simple predictions: the relationship between runway length and bird size, for example, or the fact that the birds only come when it’s not overcast, or that they tended to arrive on a certain schedule. They might even have been able to dig deeply into the data and find all kinds of spurious correlations, such as a relationship between the number of birds on the ground and how many were in the sky, or the relationship between strange barrels of liquid on the ground and the number of birds that could be expected to arrive. They could make some simple short-term predictions around this data, and even be correct.
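How easy is it to dig up a correlation like that? A minimal sketch, assuming nothing but random noise: generate one made-up "bird arrivals" series, compare it against a couple of hundred equally random candidate series, and report the strongest correlation found. The function names and sample sizes are illustrative assumptions, not anything taken from real data.

```python
import math
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def best_spurious_correlation(n_obs=20, n_candidates=200, seed=1):
    """Strongest |correlation| between a random target and random candidates.

    Every series is independent noise, so any correlation found is spurious.
    """
    rng = random.Random(seed)
    target = [rng.gauss(0.0, 1.0) for _ in range(n_obs)]
    best = 0.0
    for _ in range(n_candidates):
        candidate = [rng.gauss(0.0, 1.0) for _ in range(n_obs)]
        best = max(best, abs(pearson(target, candidate)))
    return best


if __name__ == "__main__":
    print(f"strongest correlation in pure noise: {best_spurious_correlation():.2f}")
```

With only twenty observations and enough candidate variables to search through, a respectable-looking correlation turns up even though none of the series has anything to do with the others.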

Then one day, the predictions began to fail. The carefully derived relationships meticulously measured over years failed to hold. Eventually, the birds stopped coming completely, and the strange people left. But that wasn’t a problem for the island scientists: They knew the conditions required to make the birds appear. They meticulously documented the steps taken by those first strangers on the island to bring the birds in the first place, and they knew how to control for bird size by runway length, and how many barrels of liquid were required to entice the birds. So they put their best engineers to work rebuilding all that with the tools and materials they had at hand – and unexpectedly failed.

How did all these carefully derived relationships fail to predict what would happen? Let’s assume these people had advanced mathematics. They could calculate p-values, do regression analysis, and had most of the other tools of science. How could they collect so much data and understand so much about the relationships between all of these activities, and yet be utterly incapable of predicting what would happen in the future and be powerless to control it?

The answer is that the islanders had no theory for what was happening, had no way of testing their theories even if they had had them, and were hampered by being able to see only the tiniest tip of an incredibly complex set of circumstances that led to airplanes landing in the South Pacific.

Imagine two island ‘scientists’ debating the cause of their failure. One might argue that they didn’t have metal, and bamboo wasn’t good enough. Another might argue that his recommendation for how many fake airplanes should be built was ignored, and the fake airplane austerity had been disastrous. You could pore over the reams of data and come up with all sorts of ways in which the recreation wasn’t quite right, and blame the failure on that. And you know what? This would be an endless argument, because there was no way of proving any of these propositions. Unlike Millikan, they had no test for the objective truth.

And in the midst of all their scientific argumentation as to which correlations mattered and which didn’t, the real reason the birds stopped coming was utterly opaque to them: The birds stopped coming because some people sat on a gigantic steel ship they had never seen, anchored in the harbor of a huge island they had never heard of, and signed a piece of paper agreeing to end the war that required those South Pacific bases. And the signing itself was just the culmination of a series of events so complex that even today historians argue over it. The South Sea Islanders were doomed to hopeless failure because what they could see and measure was a tiny collection of emergent properties caused by something much larger, very complex and completely invisible to them. The correlations so meticulously collected were not describing fundamental, objective properties of nature, but rather the side-effects of a temporary meta-stability of a constantly changing, wholly unpredictable and wildly complex system.

The Modern Cargo Cults

Today, entire fields of study are beginning to resemble a form of modern cargo cult science. We like to fool ourselves into thinking that because we are modern, ‘scientific’ people, we could never do anything as stupid as putting coconut shells on our ears and believing that we could communicate with metal birds in the sky through them. But that’s exactly what some are doing in the social sciences, in macroeconomics, and to some extent in climate science and in some areas of medicine. And these sciences share a common characteristic with the South Sea cargo cults: They are attempts to understand, predict, and control large complex systems through examination of their emergent properties and the relationships between them.

No economist can hope to understand the billions of decisions made every day that contribute to change in the economy. So instead, they choose to aggregate and simplify the complexity of the economy into a few measures like GDP, consumer demand, CPI, aggregate monetary flows, etc. They do this so they can apply mathematics to the numbers and get ‘scientific’ results. But like the South Sea islanders, they have no way of proving their theories and a multitude of competing explanations for why the economy behaves as it does with no objective way to solve disputes between them. In the meantime, their simplifications may have aggregated away the information that’s actually important for understanding the economy.

You can tell that these ‘sciences’ have gone wrong by examining their track record of prediction (dismal), and by noticing that there does not seem to be steady progress of knowledge, but rather fads and factions that ebb and flow with the political tide. In my lifetime I have seen various economic theories be discredited, re-discovered, discredited once more, then rise to the top again. There are still communist economics professors, for goodness’ sake. That’s like finding a physics professor who still believes in phlogiston theory. And these flip-flops have nothing to do with the discovery of new information or new techniques; they are driven merely by which economic faction happens to have random events work slightly in favor of its current model, or whose theories give the most justification for political power.

As Nate Silver pointed out in his excellent book, “The Signal and the Noise,” economists’ predictions of future economic performance are no better than chance once you get away from the immediate short term. Annual surveys of macroeconomists return predictions that do no better than what you’d get throwing darts at a dartboard. When economists like Christina Romer have the courage to make concrete predictions of the effects of their proposed interventions, they turn out to be wildly incorrect. And yet, these constant failures never seem to falsify their underlying beliefs. Like the cargo cultists, they’re sure that all they need to do is comb through the historical patterns in the economy and look for better information, and they’ll surely be able to control the beast next time.

Other fields in the sciences are having similar results. Climate is a complex system with millions of feedbacks. It adapts and changes by rules of its own that we can’t begin to fully grasp. So instead we look to the past for correlations and then project them, along with our own biases, into the future. And so far, the predictive track record of climate models is very underwhelming.

In psychology, Freudian psychoanalysis was an unscientific, unfalsifiable theory based on extremely limited evidence. However, because it was being pushed by a “great man” who commanded respect in the field, it enjoyed widespread popularity in the psychology community for many decades despite there being no evidence that it worked. How many millions of dollars did hapless patients spend on Freudian psychotherapy before we decided it was total bunk? Aversion therapy has been used for decades for the treatment of a variety of ills by putting the patient through trauma or discomfort, despite there being very little clinical evidence that it works. Ulcers were thought to have been caused by stress. Facilitated communication was a fad that enjoyed widespread support for far too long.

A string of raw facts; a little gossip and wrangle about opinions; a little classification and generalization on the mere descriptive level; a strong prejudice that we have states of mind, and that our brain conditions them: but not a single law in the sense in which physics shows us laws, not a single proposition from which any consequence can causally be deduced. This is no science, it is only the hope of a science.

— William James, “Father of American psychology”, 1892

These fields are adrift because there are no anchors to keep them rooted in reality. In real science, new theories are built on a bedrock of older theories that have withstood many attempts to falsify them, and which have proven their ability to describe and predict the behavior of the systems they represent. In cargo cult sciences, new theories are built on a foundation of sand — of other theories that themselves have not passed the tests of true science. Thus they become little more than fads or consensus opinions of experts — a consensus that ebbs and flows with political winds, with the presence of a charismatic leader in one faction or another, or with the accumulation of clever arguments that temporarily outweigh the other faction’s clever arguments. They are better described as branches of philosophy, and not science — no matter how many computer models they have or how many sophisticated mathematical tools they use.

In a cargo cult science, factions build around popular theories, and people who attempt to discredit them are ostracised. Ad hominem attacks are common. Different theories propagate to different political groups. Data and methods are often kept private or disseminated only grudgingly. Because there are no objective means to falsify theories, they can last indefinitely. Because the systems being studied are complex and chaotic, there are always new correlations to be found to ‘validate’ a theory, but rarely a piece of evidence to absolutely discredit it. When an economist makes a prediction about future GDP or the effect of a stimulus, there is no identical ‘control’ economy that can be used to test the theory, and the real economy is so complex that failed predictions can always be explained away without abandoning the underlying theory.

There is currently a crisis of non-reproducibility going on in these areas of study. In 2015, Nature reported on a project that tried to replicate studies from 98 peer-reviewed papers in psychology; only 39 of the replication attempts were judged successful. Furthermore, 97 percent of the original studies claimed that their results were statistically significant, while only 36 percent of the replication studies found statistically significant results. This is abysmal, and says a lot about the state of this “science.”

This is not to say that science is impossible in these areas, or that it isn’t being done. All the areas I mentioned have real scientists working in them using the real methods of science. It’s not all junk. Real science can help uncover characteristics and behaviors of complex systems, just as the South Sea Islanders could use their observations to learn concrete facts, such as the number of barrels of fuel oil being an indicator of how many aircraft might arrive. In climate science, there is real value to be had in studying the relationships between various aspects of the climate system, so long as we recognize that what we are seeing is subject to change and that what is unseen may represent the vast majority of interactions.

The complex nature of these systems and our inability to carry out concrete tests means we must approach them with great humility and understand the limits of our knowledge and our ability to predict what they will do. And we have to be careful to avoid making pronouncements about truth or settled science in these areas, because our understanding is very limited and likely to remain so.

Published in General

Science alone of all the subjects contains within itself the lesson of the danger of belief in the infallibility of the greatest teachers of the preceding generation.

— Richard Feynman

You should have stuck to your guns. Your first answer, 80 ± 12, is better than your second one.

This is exactly what happened in Obama’s first term. They had economists’ models into which you would input spending here or there, and the model would spit out a percent increase in GDP or a certain decrease in unemployment.

Of course, none of it came to be.

This may be the best thing I’ve read on Ricochet so far.

Friedrich von Hayek addressed the problem of the limits to knowledge in his Nobel Prize lecture. He was an economist so he probably knew something about the issue.

…

drlorentz #63: My statement was oral, and my tone was politely sardonic, in case that helps.

I have tried and failed to make this point many times. Now I can just point to this. Thank you.

Dan, I have often tried to convey the same thoughts, but never managed a tenth of your clarity and eloquence. This is a superb essay. Many thanks.

What were later scientists wrong about? Were they really not willing to contradict the great scientist, or did they merely assume that a secondary (and unrelated) hypothesis about the viscosity of air was correct? The question is important, because even without “unconscious bias” no scientific experiment can falsify any theory by itself, because every experiment depends on many auxiliary hypotheses (see Duhem and Quine).

Actually, he didn’t. That is the whole joke: genetics are nowhere near as neat and tidy as Mendel thought.

Best Comments on Ricochet Ever. My Ricochet Cult Opinion.

He got the right answer for the traits he selected. The problem was that Mendel’s results did not show enough statistical scatter. Genetics for humans might be messy but for peas Mendel was right on the money. See this, and references cited therein.

This is so, so great! I need to send it around everywhere! I’ve been so dismayed by the Salem Witch Trial atmosphere of today’s “science” — if you are not a believer, then you are evil! “Believer????” It isn’t about belief. Sigh. I’m so grateful you wrote this!

Perhaps I’m a bit more cynical than you, JimGoneWild, as I always figured that the projected outcomes of the “stimulus” were merely cover for the Dems shoveling out $1T to their favored constituencies. They would have come up with those numbers no matter what models they used.

I really appreciate, though, your insight into the generalizations made with Keynesian modeling. Correlations from the past are nothing more than that.

What a great post. Thank you, Mr. Hanson.

Dan,

Just wanted to add to the above. Fantastic piece. Best thing I’ve read in a while.

No, no one can be more cynical than me .. except most of the Ricochet bloggership. :0)

Yes, the $1T made for a nice Obama slush fund. [And with extreme cynicism] I’m sure the models came up with correct answers only after inserting a high enough input amount.

Reminds me of a joke that I think Reagan made at the expense of economists dismissing his tax reforms. I’m paraphrasing here, but I think the quip went something along the lines of:

There is an underlying reaction among many scientists and engineers: they will refuse to even test things if they do not believe they will work. This is not necessarily unreasonable, as some things are prima facie impossible, but it does mean that often even insightful new approaches and concepts are dismissed because people are conditioned to accept things as impossible merely because they have never been tried.

I’ve argued Reagan’s tax rate reduction with liberals. When I say something like, “Lowering tax rates doubled tax revenues to the Treasury,” they come back with “Yes, but it increased the federal deficit.”

Your comeback is, “The Democrats controlled Congress and kept increasing spending.” If tax cuts increased revenue, the deficit would have decreased if spending were held constant.

Well, sure. That’s my point. I was pointing out how ironic their thinking is. It’s like ..

Honey, I doubled my salary.

Yes, Dear. And now we’re in debt, Damn you!

Ironic doesn’t begin to describe it. I can think of other words but they’re not CoC compliant.

I am with you. I don’t have issues with a lot of climate science that studies existing phenomena. It is the long-term predictive modeling sub-field in climate science that is junk science. There is some usefulness in short-term predictive modeling because it gets it right more often than it gets it wrong. However, even with hurricane modeling, I actually think that is a waste of time, and I live in Hurricane Alley. (I lived about 50 miles from where the three eyes of the 2004 Florida hurricanes crossed paths.)

It is useful for a forecast a few days out so I can plan, because it gives a higher probability of who’s going to get hit than plain guessing. However, anything more than a few days out is close to junk science or outright junk science as far as I am concerned.

A fun demonstration of systems with multiple variables:

I’ve seen this used in relation to discussions of complex predictions like weather. 1 pendulum is an easy calculation, but add a second and predictability goes right out the window.

And another

The main purpose of building LIGO and similar instruments was not to test GR. As the LIGO folks like to say, the purpose is to open a new window on the universe. LIGO is an observatory, not a one-shot test of GR. I doubt that NSF would have funded it if the principal justification were to add yet one more test to the list, especially since Hulse and Taylor had already done the basic job. They got a Nobel Prize for their trouble.

A major objection to string theory is, and has been all along, that there are no testable predictions. This was never the case with GR, and furthermore the observations of Mercury’s orbit were already extant before the theory was published.

I want to say great article. This is a subject Russ Roberts on EconTalk has really tried over the years to communicate to his audience across multiple fields. To me, that is what I have gotten most out of his podcast series, since I do financial planning and some estimating for a living.

I am someone who geeks out about having fairly accurate data sets with enough somewhat homogeneous data points, where I can do real statistical modeling. It is so rare because it is an expensive and complex process in the industries I have worked in.

What I have found is the complete opposite when it comes to outlying data points: they are what you look for, because they tell you what you need to look into. Either there is a mistake in the data collection process that needs to be fixed (and that may be affecting a lot more of your data set), or the result is valid and you need to know what the caveats or exceptions to the data are. That is, the outlying data points are the low-hanging fruit when it comes to further investigation.

When it comes to using data to measure human behavior, it is actually more important to know what the data does not tell you. There are too many people (myself included) extrapolating conclusions about what the data is saying. You have to do it because you have limited time and resources and have to make decisions based on the data you have; you can’t wait around. However, you end up being wrong a lot. We keep doing it because in most cases the benefit when you are right or close to right outweighs the cost when you are wrong.

So my point is, maybe it is different when studying natural phenomena, but you should never throw out outlying data; you need to investigate it. That is proper science in my mind, because that outlying data could just disprove your hypothesis. It has happened to me on more than one occasion. When you learn the data well enough, it gets to the point where 90%+ of the time you know exactly what happened in operations when you get that outlying data. You actually don’t have to go on the floor to ask and do root cause analysis, because you have seen this outlying data before.
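One way to act on this in code is to flag outlying points for review instead of silently dropping them. The sketch below uses the common 1.5 × IQR rule, which is an assumption here (the comment above does not name a method); the function name `flag_outliers` and the sample numbers are made up for illustration.

```python
import statistics


def flag_outliers(values, k=1.5):
    """Return (index, value) pairs lying outside the k * IQR fences.

    Nothing is deleted: the caller gets a list of points to investigate.
    """
    q1, _median, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [(i, v) for i, v in enumerate(values) if v < low or v > high]


if __name__ == "__main__":
    # Hypothetical daily readings with one suspicious entry.
    readings = [12, 13, 40, 11, 14, 10, 13, 15, 12]
    for index, value in flag_outliers(readings):
        print(f"check reading #{index}: {value}")
```

Returning the positions along with the values makes it easier to trace each flagged point back to where it was collected, which is where the investigation has to start.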

First, kill all the butterflies.

Even before the lawyers?

Add a second pendulum and nonlinearity. That’s why the pendulum is started at the top. Notice that things become quite predictable towards the very end (after about 1:30) because the system becomes approximately linear. Energy is exchanged between the two parts at a regular rate that’s easy to compute. You need complexity and nonlinearity to get chaos. The climate system is complex and nonlinear.
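A double pendulum takes a fair amount of code to simulate, so here is a swapped-in, much simpler stand-in that shows the same sensitivity to starting conditions: the logistic map, x → r·x·(1 − x) with r = 4, a standard textbook example of a nonlinear rule with chaotic behavior. This is only a sketch; the starting value, the size of the nudge, and the function names are arbitrary choices for illustration.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0=0.2, delta=1e-10, steps=100):
    """Gap at each step between two runs whose starts differ by `delta`."""
    a = logistic_trajectory(x0, steps=steps)
    b = logistic_trajectory(x0 + delta, steps=steps)
    return [abs(p - q) for p, q in zip(a, b)]


if __name__ == "__main__":
    gaps = divergence()
    for step in (0, 5, 10, 20, 40, 60, 80, 100):
        print(f"step {step:3d}: gap = {gaps[step]:.3e}")
```

The rule is fully deterministic, and the two runs agree closely for the first several steps. The gap then grows roughly exponentially until the runs have nothing to do with each other, which is why short-range forecasts of such a system can be decent while long-range ones are not.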

Help me out in an argument with a family member: is a chaotic system, by definition, impossible to accurately (quantitatively) model so as to precisely predict the future?

That is a tough decision :-)